- Jul 29, 2025


Bypassing Facial Recognition—How to Detect Deepfakes and Other Fraud

In this article, we explain how fraudsters bypass facial verification and provide insights into choosing the most reliable anti-fraud solutions.

Since the dawn of facial biometric verification, fraudsters have been looking for ways to bypass it—from simple paper masks to sophisticated deepfake technology.

According to Sumsub’s Q1 2025 fraud trends research, deepfake fraud surged by 1100% and synthetic identity document fraud rose by over 300% in the United States alone. Europol's Internet Organised Crime Threat Assessment (IOCTA) also recently highlighted a significant surge in AI-driven crimes, including deepfakes, which cybercriminals increasingly use to enhance the scale and sophistication of their operations. Synthetic media is rapidly becoming a weapon of choice for fraudsters targeting financial institutions.

However, deepfake technology isn't just limited to financial fraud. In a recent case in Colorado, prosecutors claim a dentist accused of poisoning his wife asked his daughter to fabricate a deepfake video of his wife asking for deadly chemicals to support his version of events. This underscores why liveness detection and advanced biometric facial recognition systems are crucial, not just for catching scammers, but for defending against the most extreme forms of deception.

With increased regulatory scrutiny and deepfake fraud trends showing that even minor weaknesses in facial biometrics can be exploited at scale, this article explores how bad actors bypass biometric face recognition and what companies can do to stay ahead of fraudsters.

Method 1: Spoofing

“Spoofing” describes the use of fraudulent facial imagery to bypass biometric authentication. There is a wide range of technologies fraudsters can use to help them do this. Some of the most primitive ones include silicone masks—which, surprisingly, have been able to fool big tech companies over the years. However, nowadays, fraudsters are getting into more advanced forms of spoofing, including deepfakes. Let’s dive into each method.

Using pictures

In the era of social media, fraudsters can obtain almost anyone’s picture and use it to fool face verification. If a facial biometric system does not check an image for signs of a live person, such as depth and skin texture, fraudsters can simply use social media images to break into devices and accounts.

Fraudsters can also use a similar method to gain access to people’s bank accounts. For example, in 2023, a 34-year-old thief in Brazil gained access to several accounts and applied for loans by placing customer photos over a dummy.

Using video

You’d think that, if a verification technology asks users to make random movements like winking or blinking, it’s impossible to trick the system. Unfortunately, this isn’t really the case, as movements can be recorded in advance—and some verification systems fail to recognize such pre-recorded videos.

Using masks

Some silicone masks are so realistic that it can be very hard to tell when a fraudster is wearing one. In 2019, criminals used this method to impersonate the French Defense Minister and were able to steal €55 million. They did so by contacting heads of state, wealthy businessmen, and large charities via Skype, claiming that they needed money to save people kidnapped by terrorists.

More recently in China, the criminal use of lifelike silicone masks has raised new concerns about identity theft. Hyper-realistic masks, readily available on local e-marketplaces, are being marketed with claims that they can fool facial recognition systems, enabling users to impersonate others in high-security or workplace environments.

However, silicone masks can only really work if biometric technologies do not scan for subtle signs of life, like skin texture and blood flow. For this reason, in 2025, an increasing number of fraudsters are turning to AI-driven tools for more sophisticated deception.

Using deepfakes

Deepfakes use machine learning either to generate a fake persona or to impersonate an existing person, for example by manipulating existing video. They are now cheap, fast, and dangerously effective. One common attack is swapping a victim’s face onto a video that mimics liveness cues like blinking and nodding.

In June 2025, Vietnamese authorities dismantled a 14‑person criminal ring that allegedly laundered VND 1 trillion (about US $38.4 million) by deploying AI-generated face biometrics to bypass facial recognition systems at banks.

Even themed attacks are rising—our Halloween deepfake report showed spikes in fraud using seasonal filters and altered identities to bypass verification during onboarding promotions.

Since just about anyone can create a deepfake at little to no cost with free generators, it is easy, in theory, to face-swap someone and gain access to their account. However, effective deepfake detection technology can recognize deepfakes by analyzing artifacts in the provided image.


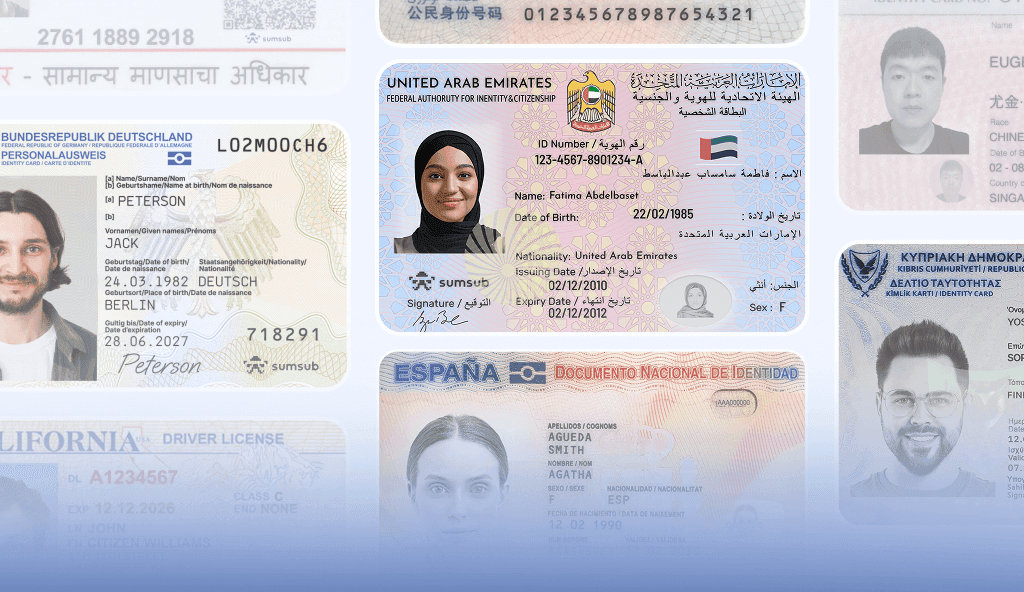

Using synthetic faces

Fraudsters can now generate completely new “people” with AI to help create synthetic identities. These don’t rely on stolen photos or videos. Instead, they’re built using AI generators that combine facial elements to make someone who doesn’t exist. Synthetic faces are hard to flag, especially if paired with fake documents.

Method 2: Bypassing

While spoofing impersonates a person, bypassing targets the system itself. Fraudsters can even manipulate biometric data to trick verification systems without showing a face at all.

Injection attacks

Injection attacks bypass liveness detection by feeding fake biometric data directly into an app or API, rather than using the camera. Fraudsters can hijack the camera stream with synthetic or pre-recorded footage, tamper with the app using emulators or rooted devices, or manipulate the API by sending generated or replayed biometric data.

These attacks often succeed when systems lack strong endpoint security or client integrity checks. To defend against them, advanced liveness solutions implement secure enclaves, anti-tampering SDKs, and encrypted pipelines that ensure the authenticity of both the device and the data.
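As a rough illustration of what an integrity check on captured data can look like, the sketch below binds each captured frame to a one-time server nonce using an HMAC. It is a minimal example under stated assumptions, not a description of any vendor's SDK: `DEVICE_KEY`, `issue_nonce`, `sign_capture`, and `verify_capture` are hypothetical names, and in a real deployment the key would sit in a secure enclave or hardware-backed keystore rather than in application code.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-device key. Assumption: in practice it would be held in a
# secure enclave or hardware-backed keystore, never in application code.
DEVICE_KEY = secrets.token_bytes(32)


def issue_nonce() -> str:
    """Server side: create a one-time value tied to a single capture session."""
    return secrets.token_hex(16)


def sign_capture(frame: bytes, nonce: str, key: bytes) -> str:
    """Client side: bind the captured frame to the server-issued nonce."""
    message = nonce.encode() + hashlib.sha256(frame).digest()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_capture(frame: bytes, nonce: str, tag: str, key: bytes,
                   used_nonces: set) -> bool:
    """Server side: reject replayed nonces and frames altered after signing."""
    if nonce in used_nonces:
        return False  # the same session payload was submitted twice
    expected = sign_capture(frame, nonce, key)
    if not hmac.compare_digest(expected, tag):
        return False  # frame or nonce does not match the signature
    used_nonces.add(nonce)
    return True
```

A check like this only shows that the payload came from a client holding the key and was not replayed or modified in transit. It does not, on its own, detect a virtual camera feeding fake footage into the signing code, which is why it is usually paired with device and app integrity checks.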

Relay attacks

Relay attacks occur when a real person performs the required liveness actions, but the interaction is relayed remotely from a different location to trick the biometric system. For instance, a fraudster might socially engineer a victim into a video call and secretly route that video to the liveness check.

These attacks are particularly effective against systems that don’t validate the origin of the camera input or track geolocation. Robust defenses against relay fraud include session validation, IP and device fingerprinting, geofencing, and behavioral or contextual analysis to ensure the user is both live and local.
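To make the device fingerprinting and geofencing ideas more concrete, here is a small sketch that compares the context recorded when a session starts with the context seen at capture time. The `SessionContext` fields, the flag names, and the 50 km default are illustrative assumptions, not values taken from any particular product.

```python
import math
from dataclasses import dataclass


@dataclass
class SessionContext:
    device_id: str  # hypothetical device fingerprint
    lat: float      # approximate latitude, e.g. from IP geolocation
    lon: float      # approximate longitude


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def relay_risk_flags(start: SessionContext, capture: SessionContext,
                     max_km: float = 50.0) -> list[str]:
    """Return reasons to treat the liveness capture as possibly relayed."""
    flags = []
    if start.device_id != capture.device_id:
        flags.append("device_mismatch")
    distance = haversine_km(start.lat, start.lon, capture.lat, capture.lon)
    if distance > max_km:
        flags.append("location_jump")
    return flags
```

A session that starts in one city and delivers its camera feed from another would come back with `location_jump`. In practice, signals like these would feed a broader risk score rather than block a user outright, since VPNs and mobile networks can shift apparent locations.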

Not all liveness systems are equally strong. Each one is only as reliable as its weakest link, which is typically the user’s device, the transmission layer, or the backend server. Every layer must be secured to prevent spoofing, relay attacks, or tampering.


What is facial recognition and how does it work?

Biometric facial recognition is a technology that identifies or verifies individuals by analyzing their facial features. It works by mapping key biometric markers, such as the distance between eyes, and comparing them with a stored facial template.

Facial recognition vs. facial verification

There is, however, a distinction between facial recognition and facial verification. Facial recognition typically compares a face against many records in public or private databases to identify an individual (a one-to-many search). In contrast, facial verification confirms a person’s identity by matching a real-time image against one specific record (a one-to-one match).
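The difference is easier to see in code. The sketch below assumes faces have already been converted into numeric templates (embeddings) by some model, which is not shown here. The three-dimensional vectors, the function names, and the 0.8 threshold are purely illustrative; real templates have far more dimensions and thresholds are tuned per system.

```python
import math


def cosine_similarity(a, b):
    """Similarity between two face templates, ranging from -1 to 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(probe, enrolled, threshold=0.8):
    """Verification (1:1): does the probe match one specific record?"""
    return cosine_similarity(probe, enrolled) >= threshold


def identify(probe, gallery, threshold=0.8):
    """Recognition (1:N): find the best match in a gallery, or None."""
    best_id, best_score = None, threshold
    for identity, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_id, best_score = identity, score
    return best_id


# Toy gallery with hypothetical identities and templates.
gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
probe = [0.9, 0.1, 0.0]
print(verify(probe, gallery["alice"]))  # one-to-one check
print(identify(probe, gallery))         # one-to-many search
```

Note that neither function says anything about whether the probe came from a live person. Matching and liveness are separate questions, which is why spoofed images can pass a matcher that has no liveness check in front of it.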

Is facial recognition safe?

Face biometrics are not foolproof. Without robust anti-fraud mechanisms like liveness detection, these systems can be fooled by spoofed images, pre-recorded videos, or AI-generated synthetic faces. This, as well as privacy obligations, makes it critical for businesses to understand how their biometric facial recognition systems work and what their vulnerabilities are.

Where is facial recognition used today?

Today, biometric face recognition systems are embedded in smartphones, used at airport gates, deployed by banks and fintech platforms, and even built into gaming services and dating apps.

How liveness detection prevents biometric fraud

A reliable biometric facial recognition system helps make sure the user is real. An AI-powered liveness check analyzes facial biometrics for subtle signals of life, including natural light reflection, micro-movements, depth cues, and consistent geometry.

Crypto exchange Bitget, for example, used Sumsub’s biometric face recognition system to stop hundreds of deepfake onboarding attempts. Liveness detection flagged AI-generated fraud attempts by spotting unnatural textures, inconsistent lighting, and missing micro-movements. With over 25 million users, Bitget uses liveness checks and face biometrics as an essential part of minimizing fraud in onboarding at scale, achieving 99% accuracy even as deepfake attacks surged by 217% in the crypto sector.

A strong biometric facial recognition system doesn’t just “see” a face—it checks for signs of life and authenticity.

Liveness detection analyzes facial biometrics such as:

- Natural light reflection in the eyes

- Micro-movements (like pulse in the cheeks)

- Skin texture and depth

- Consistent geometry from multiple angles

These checks catch pre-recorded videos, synthetic overlays, and even advanced deepfakes that mimic real expressions.
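One simple way to think about combining signals like the ones listed above is shown below. This is a hedged sketch only: production systems generally rely on trained models rather than hand-set rules, and the per-signal scores, the `LivenessSignals` type, and both thresholds are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class LivenessSignals:
    # Hypothetical per-signal scores in [0, 1], each produced by its own detector.
    eye_reflection: float
    micro_movement: float
    skin_texture: float
    geometry_consistency: float


def is_live(signals: LivenessSignals, floor: float = 0.3,
            mean_threshold: float = 0.6) -> bool:
    """Accept only if no single signal is very weak and the average is high."""
    scores = [
        signals.eye_reflection,
        signals.micro_movement,
        signals.skin_texture,
        signals.geometry_consistency,
    ]
    if min(scores) < floor:
        return False  # one clearly failed check is enough to reject
    return sum(scores) / len(scores) >= mean_threshold
```

The per-signal floor matters because a pre-recorded video or deepfake may score well on most checks and fail badly on just one, such as missing micro-movements. Averaging alone would let that through.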

Tips on choosing hacker-resistant liveness

When selecting a biometric verification solution, companies should ensure that it protects against both spoofing and bypassing. Above all, it should differentiate between real faces and artificial objects, like deepfakes or pre-recorded videos. To do so, the solution must analyze parameters such as:

- Image depth

- Eye reflections

- Skin texture

- Blood flow

Face recognition can be performed within the verification suite’s interface, which is embeddable into other apps. It can be done via webcam, smartphone, and other camera-equipped devices. Usually, all you need to do is ask the user to move their head around while the system takes a number of photos (instead of a video) and analyzes each one for signs of spoofing.

The technology can be tested as follows:

- Present a static image to the system

- Try to pass verification with eyes closed

- Use a face-spoofing prop, such as a mask, a deepfake, or a video

Any reliable technology should detect these fraud attempts.

Depending on the use case, companies may opt for passive liveness detection (which works without user interaction) or active liveness (which involves prompts like turning your head or blinking). Passive methods are generally more frictionless, while active methods can offer an added layer of security in high-risk scenarios.
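For active liveness, the prompts only add security if they are unpredictable and short-lived. Otherwise a fraudster can record the movements in advance. The following sketch shows one way to issue a random, expiring challenge. The action names, the 20-second lifetime, and the idea that some detector turns the video into a list of `observed_actions` are all assumptions for the sake of the example.

```python
import secrets
import time

# Hypothetical set of prompts the app can ask the user to perform.
ACTIONS = ["turn_left", "turn_right", "look_up", "blink", "smile"]


def issue_challenge(length=3, ttl_seconds=20.0, now=None):
    """Create an unpredictable sequence of prompts with a short lifetime."""
    now = time.time() if now is None else now
    rng = secrets.SystemRandom()  # cryptographically strong randomness
    return {
        "actions": [rng.choice(ACTIONS) for _ in range(length)],
        "expires_at": now + ttl_seconds,
    }


def check_response(challenge, observed_actions, now=None):
    """Accept only the exact sequence, performed before the challenge expires."""
    now = time.time() if now is None else now
    if now > challenge["expires_at"]:
        return False
    return observed_actions == challenge["actions"]
```

With five actions and a sequence of three, there are 125 possible challenges, so a single pre-recorded clip is unlikely to match. Longer sequences raise that number at the cost of more friction for genuine users, which is the trade-off between active and passive methods described above.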

It's also important to choose a solution that has passed independent evaluations, such as Level 2 or Level 3 presentation attack detection testing against ISO/IEC 30107-3, which tests resistance to advanced spoofing and presentation attacks.
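Evaluations based on ISO/IEC 30107-3 report two main error rates: APCER (the share of attack presentations wrongly accepted as genuine) and BPCER (the share of genuine presentations wrongly rejected). If you run your own spoofing tests, such as the static image, mask, and video attempts described earlier, you can tally results in the same way. The sketch below is a simplified illustration. The `error_rates` function and the input format are assumptions, and a formal evaluation involves far more than this calculation.

```python
from collections import defaultdict


def error_rates(presentations):
    """Compute per-attack-type APCER and overall BPCER.

    presentations: iterable of (kind, accepted) pairs, where kind is
    "bona_fide" for a genuine user or an attack label such as "photo",
    and accepted is True if the system let the attempt through.
    """
    attack_total = defaultdict(int)
    attack_accepted = defaultdict(int)
    bona_total = 0
    bona_rejected = 0
    for kind, accepted in presentations:
        if kind == "bona_fide":
            bona_total += 1
            if not accepted:
                bona_rejected += 1
        else:
            attack_total[kind] += 1
            if accepted:
                attack_accepted[kind] += 1
    apcer = {k: attack_accepted[k] / attack_total[k] for k in attack_total}
    bpcer = bona_rejected / bona_total if bona_total else 0.0
    return apcer, bpcer
```

Reporting APCER per attack type, rather than as one blended figure, makes it harder for strong results against photos to hide a weakness against masks or deepfakes.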

Don’t forget to ask solution providers about the data encryption mechanisms they employ, which should be state-of-the-art and resistant to relay or man-in-the-middle attacks.

Ultimately, the right liveness detection tool should combine security, usability, and technical resilience. Crucially, it must keep pace with evolving fraud methods by continuously updating its algorithms, adapting its solution architecture, and leveraging the latest AI advancements.

If you’re evaluating facial recognition system providers, focus on real-world performance. Any reputable provider should be able to demonstrate how their system holds up against deepfakes, synthetic identities, and spoofing attacks under pressure. Prioritize providers that offer passive liveness detection, multi-angle capture, and protection against bypassing methods like injection and relay attacks. Make sure that data is encrypted and that the system has been tested under real-world attack conditions.


How does facial recognition work step by step?

Facial recognition captures a person’s face using a camera, then analyzes unique facial features like the distance between eyes and cheekbone shape to create a biometric template. This template is compared to a database to identify or verify the individual.


Can deepfakes fool face recognition systems?

Yes, sophisticated deepfakes can sometimes bypass basic facial recognition by mimicking facial features and movements. However, advanced systems with liveness detection and artifact analysis can detect inconsistencies that reveal deepfakes.


Is facial recognition safe for identity verification?

Facial recognition is generally safe when combined with strong security measures like liveness detection, encryption, and anti-spoofing techniques. Without these, fraudsters can exploit vulnerabilities using photos, videos, or deepfakes.


What’s the difference between face recognition and verification?

Face recognition matches a face against a large database to find a person’s identity, while face verification confirms whether a face matches one specific identity.


How does liveness detection work in facial recognition?

Liveness detection analyzes real-time signs of life such as blinking, subtle facial movements, skin texture, and light reflections to ensure the face belongs to a live person rather than a photo, video, or deepfake.
