- Jan 15, 2026

AI Fake IDs and the New KYC Risk

AI-generated fake IDs are bypassing traditional KYC: learn why businesses need to rethink their identity verification in 2026.

AI-generated fake IDs are already breaking traditional KYC systems. What once required specialized skills and significant resources can now be done for as little as $15 in half an hour using generative AI, which allows fraudsters to bypass legacy identity verification controls at scale. As a result, businesses face higher fraud losses, compliance risk, and regulatory exposure.

According to the Sumsub 2025 Identity Fraud Report, AI-generated documents are a major fraud trend. Over a six-month period in 2025, 2% of all detected fake documents were created using generative AI tools such as ChatGPT, Grok, and Gemini. Because competition among major technology companies continues to accelerate advances in AI, this share is expected to grow rapidly, potentially expanding the scale and impact of AI-driven identity fraud in the near future.

Among the most widely discussed examples is OnlyFake, a service that illustrates how powerful—and dangerous—generative AI can be when applied to identity fraud. Using advanced AI technologies, it produced highly realistic counterfeit driver’s licenses and passports. In reported cases, these fake IDs were then used to successfully pass Know Your Customer (KYC) checks on several cryptocurrency exchanges.

This article explores what AI fake IDs are, why some KYC providers fail to detect them, and how modern identity verification systems are evolving to stop them—especially in regulated environments.

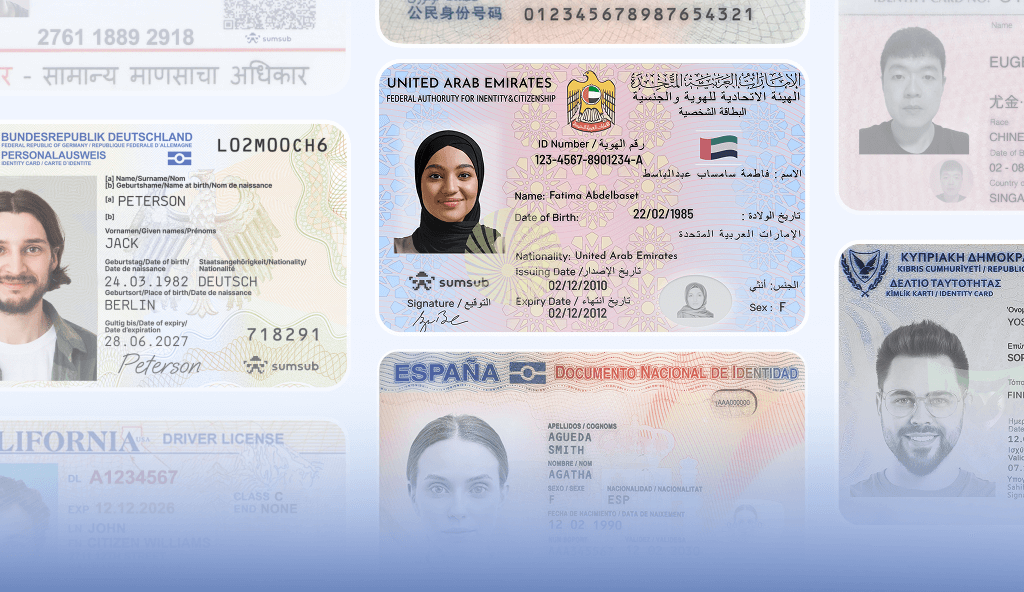

What is an AI fake ID?

An AI fake ID is a digitally generated identity document—such as a passport, national ID card, or driver’s license—created using machine learning models. These systems combine realistic templates, synthetic portraits, text generation, and image enhancement to produce documents that look authentic at first glance.

Unlike older fake IDs that relied on obvious Photoshop artifacts, AI fake IDs are generated end-to-end. Fonts, hologram patterns, shadows, facial proportions, and background textures are all synthesized in ways that closely mimic real government-issued documents.

OnlyFake and similar services

The underground online service OnlyFake gained widespread recognition for providing “instant” fake ID images that could pass basic online verification checks. According to investigative reporting, fake driver’s licenses and passports generated by OnlyFake were used to bypass KYC checks on major cryptocurrency exchanges, and users reportedly shared them as having been accepted on platforms such as Kraken, among others. The service sold these AI-generated fake IDs for as little as $15 each and could generate dozens or even hundreds at once from spreadsheet uploads, which dramatically lowered the cost and time required to obtain convincing fraudulent credentials.

OnlyFake is not the only service enabling AI-assisted identity fraud. Threat Hunter and other cybersecurity researchers have uncovered underground marketplaces and services that go further: they offer full KYC bypass solutions using AI-generated facial videos, forged documents, and stolen data. These marketplaces combine AI, document forgery, and real stolen identity data to help fraudsters pass verification on multiple platforms, including crypto exchanges, digital wallets, and merchant accounts.

Both types of services highlight the same core problems:

- Many digital onboarding flows still rely on static image uploads of identity documents

- Weak KYC and identity verification systems can be easily tricked by realistic AI-generated or synthetic identities

The significance of OnlyFake and similar services lies less in the tools themselves than in what they demonstrate: AI can now easily replicate trust signals that were once difficult to forge.

What are the dangers of AI-generated fake IDs?

In November 2024, the US Treasury’s Financial Crimes Enforcement Network (FinCEN) issued an alert confirming that criminals have used generative AI to alter or fully generate ID images and, in some cases, have successfully opened accounts using these fake identities. There are also documented cases in Italy where criminals used AI to open bank accounts and digital wallets.

Once opened, these accounts are typically used to receive and launder proceeds from online scams, including check fraud, credit card fraud, authorized push payment fraud, and loan fraud.

AI-generated fake IDs facilitate KYC fraud by allowing criminals to bypass onboarding checks and create synthetic identities at scale. After onboarding, these accounts can be exploited for money laundering, loan fraud, mule networks, and payment abuse—often across multiple platforms at the same time.

This significantly increases money laundering risk, as illicit funds can move through accounts that appear fully verified. If a fake identity passes onboarding through a legacy, non-technology-driven KYC process, it can enable money laundering to continue for a long period, unless suspicious activity is detected at another control layer, such as transaction monitoring or behavioral analysis.

Prolonged undetected fraud and money laundering also carry severe consequences: regulated entities face substantial regulatory fines and, in some cases, license revocation or forced shutdown, while non-regulated platforms may suffer significant financial losses.

Regulatory, legal, and compliance exposure

Apart from suffering direct financial losses, organizations that fail to detect AI-generated fake IDs risk unintentionally violating AML regulations. In regulated industries such as banking, fintech, gaming, and crypto, this can result in regulatory fines, enforcement actions, loss of operating licenses, and long-term reputational damage.

Just recently, Australia’s communications regulator fined Optus AUD 826,320 (~USD 553K) after scammers were able to exploit a flaw in identity verification software to bypass checks and take control of customers’ mobile services. The breach allowed unauthorized access that facilitated identity theft and financial loss for users, and the regulator emphasized that robust ID protection was mandatory.

Under the EU’s GDPR, companies can be fined for failing to implement adequate technical and organizational measures to protect personal data, including identity controls. For example:

- Vodafone Germany was fined €45 million ($52.5 million) for insufficient monitoring and contract verification controls that led to fraud and third‑party access.

- S‑Pankki Oyj was fined €1.8 million ($2.1 million) after a flaw allowed unauthorized account access due to weak security measures.

- Orange Espagne was fined €1.2 million ($1.4 million) in a case where inadequate identity checks enabled SIM‑swap fraud, a common vector for identity and financial fraud.

As regulators become more aware of AI-driven identity fraud, expectations for stronger controls are rising. As an example, in the UK, the Economic Crime and Corporate Transparency Act 2023 introduced the corporate criminal liability offence of “failure to prevent fraud,” which holds companies accountable if they fail to prevent fraud committed by employees or agents acting on the organization’s behalf. While this law does not apply to external fraud—such as attackers using AI-generated fake IDs to bypass KYC—it illustrates the broader regulatory trend: businesses are expected to adopt comprehensive controls, risk monitoring, and verification procedures. Strengthening internal processes to prevent internal fraud often also helps mitigate the risk of external attacks, including identity fraud, making compliance both a legal and practical safeguard.

Eroding trust in digital identity verification

Legacy digital identity verification systems are built on a core assumption: that a document represents a real person, and that the person submitting it is its rightful owner. AI fake IDs directly challenge this assumption. When highly realistic synthetic documents can pass visual and automated checks, “real-looking” no longer guarantees authenticity.

This leads to a broader category of identity verification fraud, where the verification process itself becomes a target. As AI fake IDs improve, organizations have no choice but to reconsider reliance on document checks alone and adopt more robust, multi-layered verification strategies.

Mobile identity verification: SDK vs fake ID uploads

Why upload-based verification struggles against modern fraud

Many platforms still allow users to upload photos or scans of ID documents. This method is particularly vulnerable to AI fake IDs because:

- AI-generated images can appear camera-perfect and often bypass traditional image quality and authenticity checks designed for static photo uploads

- Metadata can be fabricated or stripped, removing signals that indicate whether an image was captured live on a real device

- No proof of real-time user presence is required

Upload-only flows were designed for convenience, not adversarial AI environments.

The advantage of mobile SDK verification

Mobile identity verification SDKs require users to capture documents and selfies in real time using their device's camera. This introduces friction for attackers and creates multiple data points for analysis, such as motion, focus changes, lighting shifts, device integrity, and geolocation signals.

Crucially, modern SDKs incorporate liveness detection, which verifies that the person submitting the selfie is physically present and responding in real time, and not a static photo, deepfake, or AI-generated video. This transforms identity checks from static image review into dynamic behavioral and biometric analysis, making it far more difficult for AI-generated fake IDs or synthetic identities to bypass verification.

Identity verification that wins: Layers that stop AI fake IDs

Document-based identity checks on their own are no longer sufficient to prevent modern fraud. As fake IDs become easier to create and harder to distinguish from genuine documents, relying on a single verification step creates critical security gaps. In 2026, effective fraud prevention requires a layered approach that makes fraud too costly and time-consuming to be worthwhile and, in many cases, practically infeasible.

Make fraud too costly for bad actors

Fraudsters and money launderers behave rationally. They look for the lowest-cost, lowest-friction targets, typically platforms with obsolete KYC. They abandon schemes when cost, time, or failure rates get too high, and they scale up when a method is cheap and repeatable.

If bypassing KYC is inexpensive (e.g., $15 for a fake ID that passes one static ID check), a platform becomes an attractive target. But if a bypass demands multiple resources and coordination and still fails repeatedly, most attackers move on.

Effective fraud prevention requires a multi-layered approach that combines document verification with biometric matching, liveness detection, and behavioral analytics. By increasing the number and diversity of verification layers, organizations raise the cost, complexity, and risk for fraudsters. This makes large-scale abuse significantly harder while maintaining a secure and compliant identity verification process.
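The cost argument above can be illustrated with simple arithmetic. The sketch below is a rough model, not a measurement: it assumes every attempt costs the same and that verification layers fail independently. Apart from the $15 fake ID price cited earlier, all numbers (the extra $40 per attempt and the pass probabilities) and the function name are hypothetical.

```python
from math import prod


def expected_cost_per_success(cost_per_attempt: float, pass_probs: list[float]) -> float:
    """Expected attacker spend to get one account through every layer.

    Assumes identical cost per attempt and independent layers, so the
    number of attempts until success is geometric with mean 1 / p.
    """
    p_success = prod(pass_probs)  # chance that one attempt passes all layers
    if p_success <= 0:
        return float("inf")  # a layer that never passes makes the attack infeasible
    return cost_per_attempt / p_success


# Static upload only: a $15 fake ID with a hypothetical 50% pass rate.
static_upload = expected_cost_per_success(15.0, [0.5])

# Layered flow: hypothetical extra $40 per attempt (deepfake video, device setup)
# and hypothetical pass rates for document, liveness, and behavioral checks.
layered = expected_cost_per_success(15.0 + 40.0, [0.5, 0.1, 0.2])

print(f"static upload: ${static_upload:,.0f} per accepted account")
print(f"layered flow:  ${layered:,.0f} per accepted account")
```

Under these made-up inputs the expected cost per accepted account rises from $30 to roughly $5,500, which is the kind of gap that pushes rational attackers toward easier targets.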

Let’s break down the layers.

Liveness detection and face matching

One of the most effective defenses against AI fake IDs is biometric liveness detection, which checks that a real human is present during verification, not just a generated image. Face matching then compares that live face with the portrait on the submitted document.

Advanced systems analyze micro-movements, blinking patterns, depth cues, and challenge-response interactions that AI-generated images struggle to replicate consistently.
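As an illustration of the challenge-response idea, here is a minimal sketch of the server-side bookkeeping only. It assumes a separate vision component (not shown) reports which actions it observed in the live video. The prompt set, the 20-second time limit, and all names are hypothetical and do not describe any particular vendor's SDK.

```python
import secrets
from dataclasses import dataclass

# Hypothetical prompt set; a real system would define its own.
ACTIONS = ("blink", "turn_left", "turn_right", "smile")


@dataclass(frozen=True)
class Challenge:
    nonce: str                # ties the response to this session
    actions: tuple[str, ...]  # random sequence the user must perform
    issued_at: float          # timestamp in seconds
    ttl_seconds: float        # how long the challenge stays valid


def issue_challenge(now: float, length: int = 3, ttl_seconds: float = 20.0) -> Challenge:
    """Create an unpredictable action sequence so replayed video cannot match it."""
    actions = tuple(secrets.choice(ACTIONS) for _ in range(length))
    return Challenge(secrets.token_hex(16), actions, now, ttl_seconds)


def verify_response(ch: Challenge, nonce: str, observed: tuple[str, ...], now: float) -> bool:
    """Accept only a fresh response with the right nonce and action order."""
    fresh = 0 <= now - ch.issued_at <= ch.ttl_seconds
    return fresh and secrets.compare_digest(nonce, ch.nonce) and observed == ch.actions


challenge = issue_challenge(now=100.0)
print(verify_response(challenge, challenge.nonce, challenge.actions, now=105.0))  # True
print(verify_response(challenge, challenge.nonce, challenge.actions, now=130.0))  # False: expired
```

Because the sequence is random and short-lived, a pre-rendered image or video is unlikely to match it, although this check alone says nothing about real-time deepfake injection.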

Document authenticity signals

Modern identity verification solutions inspect document-level features, including:

- Template consistency with issuing authorities

- Security element placement

- Font geometry and spacing

- Pixel-level anomaly detection

AI fake IDs often look convincing overall, but fail under forensic scrutiny.

Fraud detection training using samples

AI-based fraud detection systems are only as good as the data used to train them. To detect AI fake IDs, models must be trained on both genuine documents and known fraudulent samples, including:

- AI-generated IDs

- Edited real IDs

- Synthetic identity combinations

- Cross-border document mismatches

Continuous model updating

Fraud patterns evolve quickly. As AI generators improve, detection models must be continually retrained and updated to keep pace. Static, rule-based systems are no longer sufficient; adaptive machine learning pipelines are needed.
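One simple way to decide when retraining is due is to track how many recently confirmed fraud cases the current model actually caught. The sketch below assumes a feed of manually confirmed labels; the function names, the baseline, and the 0.05 tolerance are hypothetical choices for illustration.

```python
def recall(labels: list[bool], flagged: list[bool]) -> float:
    """Share of confirmed fraud cases that the model flagged."""
    caught = sum(1 for is_fraud, hit in zip(labels, flagged) if is_fraud and hit)
    total = sum(labels)
    return caught / total if total else 1.0  # no fraud seen: nothing was missed


def needs_retraining(baseline_recall: float, labels: list[bool],
                     flagged: list[bool], tolerance: float = 0.05) -> bool:
    """Signal retraining when recent recall falls below baseline minus tolerance."""
    return recall(labels, flagged) < baseline_recall - tolerance


# Hypothetical recent window: four confirmed fakes, only two were flagged.
recent_labels = [True, True, True, True, False]
recent_flags = [True, True, False, False, False]
print(needs_retraining(0.9, recent_labels, recent_flags))  # True
```

A drop like this would suggest that a new generator or template has appeared and that fresh samples belong in the training set.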

Fraud prevention for AI deepfakes and digital forgeries

Beyond IDs: The deepfake problem

AI fake IDs are part of a broader issue: AI-generated digital forgeries. Voice deepfakes, synthetic selfies, and video impersonation attacks are increasingly used alongside fake documents to bypass security.

According to Sumsub’s 2025–2026 Identity Fraud Report, the avalanche of deepfakes has been a major fraud trend and is expected to continue in the coming years. In 2025, deepfakes ranked among the top five types of fraud. They are playing a central role in increasingly sophisticated, multi-layered fraud schemes that are harder to detect.

There are documented cases in which video or voice deepfakes of politicians were used in investment scams, and in which deepfake videos of CEOs or CFOs convinced finance staff to urgently transfer large sums. In these scenarios, humans are often the weakest link, and basic liveness detection systems can be just as vulnerable. The situation changes, however, when advanced technology comes into play: advanced AI-powered liveness detection combined with a multi-layered defense strategy significantly increases resilience against such attacks.

Fighting sophisticated schemes calls for up-to-date technology and cross-channel analysis and defense that connect biometric identity verification, device fingerprinting, behavioral biometrics, and transaction monitoring.

Are we witnessing a sophistication shift in fraud?

Yes. One of the most significant fraud trends of 2025 and early 2026 has been the sophistication shift: fewer attacks, but more complex, efficient, and devastating ones. This shift has been fueled largely by AI.

That sophistication now shows up across the entire fraud journey, not just at onboarding. So yes, making onboarding hard still matters—but it can’t be the only line of defense. If a bad actor slips through early checks or uses a legitimate user’s credentials to pass KYC, there are still other mechanisms to stop them later on, whether that’s through anomaly detection, behavioral signals, or transaction monitoring. The key is having protection at every level, so a single miss doesn’t turn into a major loss.

Defense-in-depth strategy

No single check can stop sophisticated AI fraud. The most resilient systems use layered defenses, combining:

- Real-time capture requirements

- Biometric verification

- Document forensics

- Behavioral analysis

- Risk-based decision engines

This approach reduces reliance on any one signal that AI might exploit.
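The layers listed above can be combined in a small risk-based decision function. This is a sketch under stated assumptions: the signal names, the 0-to-1 score scale, and every threshold are hypothetical, and a production engine would be tuned on real data. The design point it shows is taking the weakest verification signal, so one strong score cannot compensate for a failed layer.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    live_capture: bool          # captured through the SDK camera, not uploaded
    liveness_score: float       # 0..1, higher means more likely a live person
    face_match_score: float     # 0..1, selfie versus document portrait
    doc_forensics_score: float  # 0..1, higher means more likely genuine
    behavior_risk: float        # 0..1, higher means riskier behavior


def decide(s: Signals) -> str:
    """Return 'approve', 'review', or 'reject' using hypothetical thresholds."""
    if not s.live_capture:
        return "review"  # uploads lack real-time presence, so escalate
    weakest = min(s.liveness_score, s.face_match_score, s.doc_forensics_score)
    if weakest < 0.3 or s.behavior_risk > 0.9:
        return "reject"
    if weakest < 0.7 or s.behavior_risk > 0.5:
        return "review"
    return "approve"


print(decide(Signals(True, 0.95, 0.90, 0.92, 0.1)))  # approve
print(decide(Signals(True, 0.95, 0.90, 0.20, 0.1)))  # reject: document forensics failed
```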

Building future-proof identity systems: AI-powered anti-fraud and KYC

We at Sumsub always say that it’s crucial to fight fire with fire. Therefore, to defend against AI fake IDs and related fraud, organizations should:

- Move away from upload-only verification

- Adopt mobile SDK-based identity checks

- Invest in AI-powered fraud detection

- Regularly test systems against new attack vectors

By sticking to this strategy, organizations can both keep fraud at bay and demonstrate robust fraud controls that meet regulators’ AML/KYC standards as those standards continue to tighten.

The rise of AI fake IDs is not a temporary problem but a structural shift in how fraud operates in the digital world, and it is only one piece of a larger shift in fraud sophistication. To fight back, organizations must act proactively, using advanced, multi-layered protection.

FAQ

- What is AI-generated ID fraud?

AI-generated ID fraud is the use of artificial intelligence to create realistic but fake identity documents or biometrics to bypass identity verification and commit fraud.

- What is OnlyFake?

OnlyFake is an underground service that uses generative AI to produce counterfeit passports and driver’s licenses designed to pass online KYC checks.

- What is synthetic identity fraud?

Synthetic identity fraud occurs when criminals combine real and fabricated personal data to create a new, fictitious identity that can pass verification systems.

- How to prevent identity fraud?

Identity fraud can be prevented by using layered verification that combines document checks, biometric matching, liveness detection, and device and behavioral signals.

- How can systems detect synthetic identity fraud?

Systems detect synthetic identity fraud by analyzing inconsistencies across identity data, biometrics, device fingerprints, behavior patterns, and historical fraud signals.
