- Dec 02, 2025

- 10 min read

More Sophisticated—and More AI-Driven—Than Ever: Top Identity Fraud Trends to Watch in 2026

Learn about the new and growing fraud trends you need to be aware of and how to stay safe in 2026.

In 2025, AI went from assistant to hacker. In September, Anthropic discovered that a Chinese state-linked hacking group had manipulated its developer tool, Claude Code, not merely to get suggestions but to launch and run a large-scale cyber-espionage campaign against roughly 30 major targets around the world. Astoundingly, the AI carried out an estimated 80–90% of the operation on its own, working through reconnaissance, writing exploit code, harvesting credentials, installing backdoors, and exfiltrating data, with humans intervening only at a few key decision points.

AI agents going rogue and increasingly sophisticated fraud schemes are just two of the unsettling trends highlighted in Sumsub’s 2025–2026 Identity Fraud Report.

Gone are the days when we only had to worry about simple tricks, such as poorly edited or stolen IDs and low-quality phishing emails. Today, criminals combine multiple coordinated techniques, including highly sophisticated deepfakes, synthetic identities that blend real and fake information, hard-to-detect AI-generated documents that fool verification systems, and AI agents that breach systems. This is only expected to get worse in 2026. The good news is that there are also effective tools to fight back.

Let’s explore some of the key fraud trends businesses need to be aware of in 2026, the steps regulators are taking to level the playing field, and how businesses can protect themselves.

Trend 1: Fraudsters step up their game

2025 saw sophisticated fraud almost triple, according to our research. In 2024, only about 10% of fraud attempts were advanced, but by 2025, this had surged to 28%—a 180% increase.
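For readers checking the math: the 180% figure is a relative increase, not a difference in percentage points. A minimal Python sketch using the two shares quoted above:

```python
def relative_change_pct(old: float, new: float) -> float:
    """Percentage change from old to new (e.g. 10 -> 28 gives 180)."""
    return (new - old) / old * 100

share_2024 = 10.0  # % of fraud attempts classed as sophisticated in 2024
share_2025 = 28.0  # % of fraud attempts classed as sophisticated in 2025

print(f"{share_2025 - share_2024:.0f} percentage points")                # 18 percentage points
print(f"{relative_change_pct(share_2024, share_2025):.0f}% relative increase")  # 180% relative increase
print(f"{share_2025 / share_2024:.1f}x")                                   # 2.8x
```

An 18-point rise from a 10% base means the share is 2.8 times its 2024 level, which is why it is described as almost tripling.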

Interestingly, overall identity fraud rates have remained relatively stable in recent years. In 2023, 2.0% of verifications showed signs of identity fraud. This increased to 2.6% in 2024 before falling back to 2.2% in 2025.

Taken together, these statistics show that while the overall level of identity fraud has not changed much, there has been a shift towards more advanced types of identity fraud.

This is all part of the ‘Sophistication Shift’, where fraudsters are increasingly deploying multi-step, coordinated schemes. Some stages of these complex operations are now supported by AI, but the sophistication comes from the overall structure and coordination, not AI alone.

Generative AI has enabled criminals to quickly and inexpensively create increasingly realistic fake IDs, documents, and other tools of fraud. This has effectively industrialized what was previously a skilled activity that only a relatively small number of malicious actors could employ.

What this means is that, while identity fraud may not be getting much more common than in previous years, it is becoming harder to detect and, therefore, more dangerous for businesses and their users.

Examples of sophisticated fraud include:

- Creating synthetic identities to pass verification checks, then using the resulting accounts as money mules in money-laundering schemes

- Orchestrating fraud rings where multiple synthetic and stolen identities interact to reinforce each other’s legitimacy

- Combining high-fidelity AI-generated ID documents with deepfake videos to fool liveness checks

Trend 2: A tsunami of deepfakes

Deepfakes are highly realistic synthetic images, video, or audio. They can often fool people into believing they are genuine and can get past many traditional anti-fraud systems.

Try to spot a deepfake yourself with our For Fake’s Sake game or in Sumsub’s Anniversary Quiz.

According to our findings, deepfakes ranked among the top five types of first-party fraud in 2025, where individuals attempt to bypass verification checks in order to carry out fraudulent activities themselves. Some jurisdictions saw a drastic surge in deepfake attacks: the Maldives, for example, experienced the largest increase, with 2,100% year-on-year growth.

The biggest trend of 2025, likely to continue into 2026, is the democratization of deepfakes: they are now accessible to anyone with the right tools. The UK government predicted that 8 million deepfakes would be shared in 2025, up from 500,000 in 2023.

At the same time, they are playing a central role in increasingly sophisticated, multi-layered fraud schemes that are harder to detect.

One example of deepfakes being used for fraud, highlighted in our 2024 Identity Fraud Report, involved an Arup employee who transferred US$25 million on what appeared to be instructions from senior management. It later emerged that the instructions came from fraudsters using deepfakes to impersonate Arup’s senior executives.

Trend 3: Synthetic identities are the cocktail of choice for first-party fraudsters

Synthetic identities were used in 1 in 5 (21%) of first-party frauds detected in 2025. These are composite identities created from a potent cocktail of real and fabricated data that can fool many KYC systems.

First-party fraud is where an individual attempts to pass verification checks in order to carry out fraudulent activity themselves. This is contrasted with third-party fraud, where criminals impersonate genuine users or compromise their accounts, then use those accounts to commit fraud.

First-party fraudsters will either use their own identity or a fake one when signing up to a platform for the purposes of fraud. Because they combine real, verifiable information (such as government-issued identity numbers) with false data (e.g., dates of birth), synthetic identities can be much harder to detect than entirely fake IDs, while also avoiding the need for criminals to expose their own identities.

Going forward, we anticipate that criminals will increasingly use clusters of synthetic identities interacting with each other to reinforce their credibility. AI-generated documents and videos will make these identities look more authentic than ever, making detection even more challenging.
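Clusters like these often give themselves away through shared infrastructure: several supposedly independent accounts registered from the same device or phone number. As an illustration only, here is a minimal Python sketch that links accounts sharing any attribute value into clusters using a union-find structure. The account records and field names (`device_id`, `phone`) are hypothetical, and this is a generic link-analysis technique rather than a description of how any particular vendor detects fraud rings.

```python
from collections import defaultdict

def find(parent: dict, x: str) -> str:
    # Union-find lookup with path halving
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x

def union(parent: dict, a: str, b: str) -> None:
    ra, rb = find(parent, a), find(parent, b)
    if ra != rb:
        parent[rb] = ra

def link_accounts(accounts: list, keys=("device_id", "phone")) -> list:
    """Group account IDs that share any value of the given (hypothetical) attributes."""
    parent = {acc["id"]: acc["id"] for acc in accounts}
    first_seen = {}  # (attribute, value) -> first account ID that had it
    for acc in accounts:
        for key in keys:
            value = acc.get(key)
            if value is None:
                continue
            marker = (key, value)
            if marker in first_seen:
                union(parent, first_seen[marker], acc["id"])
            else:
                first_seen[marker] = acc["id"]
    clusters = defaultdict(set)
    for acc_id in parent:
        clusters[find(parent, acc_id)].add(acc_id)
    # Only clusters of two or more accounts are candidate rings
    return [c for c in clusters.values() if len(c) > 1]

accounts = [
    {"id": "A", "device_id": "d1", "phone": "p1"},
    {"id": "B", "device_id": "d1", "phone": "p2"},  # shares a device with A
    {"id": "C", "device_id": "d2", "phone": "p2"},  # shares a phone with B
    {"id": "D", "device_id": "d3", "phone": "p3"},  # shares nothing
]
print([sorted(c) for c in link_accounts(accounts)])  # [['A', 'B', 'C']]
```

Note that A and C are linked even though they share nothing directly; the chain through B is exactly what a ring of mutually reinforcing identities looks like in the data. Linking on strong identifiers such as devices tends to be more telling than linking on weak ones such as a shared public IP address.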

Trend 4: AI outsmarts age checks and physical IDs

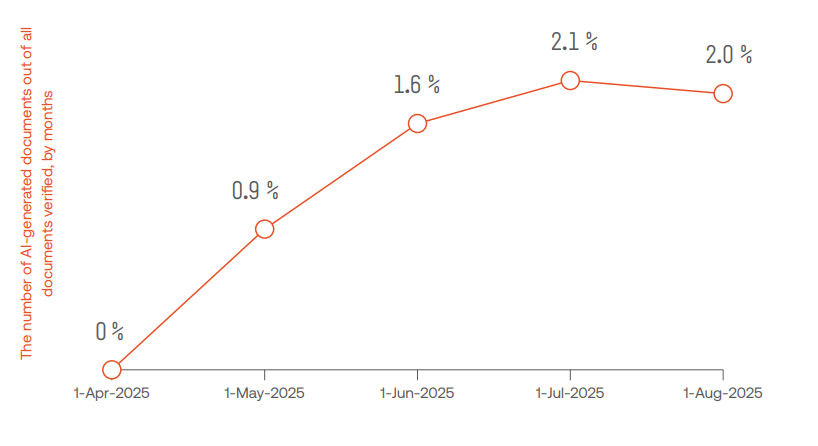

According to our data, 2% of all fake documents detected in 2025 were generated using tools such as ChatGPT, Grok, and Gemini. While this may seem like a small share, it accumulated in only about six months, since we first spotted these types of documents in April 2025. Given the ongoing AI race among major tech companies, this trend could accelerate rapidly, potentially increasing the scale of the problem in the near future.

Growth in AI-generated documents

Various methods have been tried to combat the misuse of AI-generated documents, including having AI tools insert watermarks into the content they create and deploying new technology to detect AI-generated content. Unfortunately, it has been shown that scammers can bypass these measures with the right malware.

For businesses, this means that convincing fake documents are likely to become more prevalent as more people can create them. These tools may also enable faster, higher-volume attempts to beat verification checks, and those attempts will be significantly harder to detect.

Online age verification has also been a hot topic in 2025, with the UK Government introducing mandatory age verification for age-restricted websites via the Online Safety Act. This requires companies to verify the ages of users on platforms including social media, adult sites, and gaming sites. Australia has gone down a similar route, requiring age-restricted social media platforms to take reasonable steps to prevent under-16s from creating accounts.

However, various methods, including AI-generated synthetic identities and deepfakes, can be used to bypass age verification checks. Beyond undermining age checks, this may also familiarize individuals with AI-assisted techniques, potentially encouraging them to experiment with these tools in other areas, including financial fraud.

Suggested read: Bypassing Facial Recognition—How to Detect Deepfakes and Other Fraud

Trend 5: Shift from fooling systems to hacking signals

It is not just identity data that fraudsters are targeting. In 2025, fraudsters went deeper by targeting the data pipelines and signals underlying identity checks, rather than just the identity artifacts themselves.

This means attacking the ‘telemetry layer’ within software and network systems, which is responsible for collecting, transmitting, and initially processing user data.

Fraudsters’ tactics for tampering with the telemetry layer include using developer tools, incognito mode, privacy and hardened browser settings, and virtual machines. These let them simulate device behavior, test verification protocols in controlled environments, hide browser fingerprints, and appear to be operating from a different device each time they attempt to pass a verification check.

Telemetry tampering can be incredibly dangerous for businesses targeted by fraudsters. This is because it allows criminals to interfere with the integrity of the behavioral and environmental signals on which modern anti-fraud systems depend. As a result, fraudsters may be able to bypass multiple safeguards simultaneously, even for highly advanced anti-fraud systems.
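Defending against this starts with checking whether the signals a client reports are consistent with each other and with what the server observes. The sketch below is purely illustrative: the `Telemetry` fields, the renderer markers, and the fingerprint threshold are hypothetical assumptions, although automation flags such as the browser’s `navigator.webdriver` property do exist.

```python
from dataclasses import dataclass

# Hypothetical renderer substrings often associated with virtualized or software rendering
VM_RENDERER_MARKERS = ("vmware", "virtualbox", "swiftshader", "llvmpipe")

@dataclass
class Telemetry:
    client_timezone: str        # timezone reported by the browser or device
    ip_timezone: str            # timezone inferred server-side from the IP address
    webdriver: bool             # automation flag reported by the browser
    gpu_renderer: str           # graphics renderer string reported by the client
    fingerprints_last_24h: int  # distinct device fingerprints seen for this user in 24h

def telemetry_flags(t: Telemetry) -> list:
    """Return the names of inconsistent or suspicious signals (hypothetical rules)."""
    flags = []
    if t.client_timezone != t.ip_timezone:
        flags.append("timezone_mismatch")
    if t.webdriver:
        flags.append("automation_flag")
    if any(marker in t.gpu_renderer.lower() for marker in VM_RENDERER_MARKERS):
        flags.append("virtualized_renderer")
    if t.fingerprints_last_24h > 3:  # illustrative threshold, not a recommendation
        flags.append("fingerprint_churn")
    return flags

sample = Telemetry("Europe/London", "Asia/Singapore", False, "Google SwiftShader", 5)
print(telemetry_flags(sample))  # ['timezone_mismatch', 'virtualized_renderer', 'fingerprint_churn']
```

None of these flags is conclusive on its own: legitimate users also travel and use privacy or hardened browser settings, so signals like these are better weighed together than used to block outright.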

Check out Sumsub’s 2025–2026 Identity Fraud Report for a deep dive into how fraudsters operate and how businesses can protect themselves.

Trend 6: AI fraud agents: Foes, not friends

One of the most worrying developments in 2025 has been the emergence of AI fraud agents. These are autonomous systems capable of executing entire fraud operations with minimal human intervention.

Unlike traditional bots or scripts, these agents combine generative AI, automation frameworks, and reinforcement learning. This means they can generate fake IDs and documents on demand, interact convincingly with verification interfaces in real time, and learn from failed attempts to bypass verification systems so that they can refine their approach for future attempts.

One of the most alarming things about AI fraud agents is how comprehensive their attempts to overcome verification processes can be. They can combine multiple methods in a coordinated way, for example, creating a synthetic persona, submitting a deepfake video, tampering with device telemetry, and reattempting verification with minor variations until it succeeds.

Currently, AI fraud agents are a relatively niche technology, primarily used and discussed in black-market forums and closed research circles. However, analysts predict a boom in AI-driven autonomous fraud in 2026. This could see coordinated fleets of agents conducting high-speed, multi-step attacks at scale, potentially overwhelming traditional anti-fraud systems.

Suggested read: Know Your Machines: AI Agents and the Rising Insider Threat in Banking and Crypto

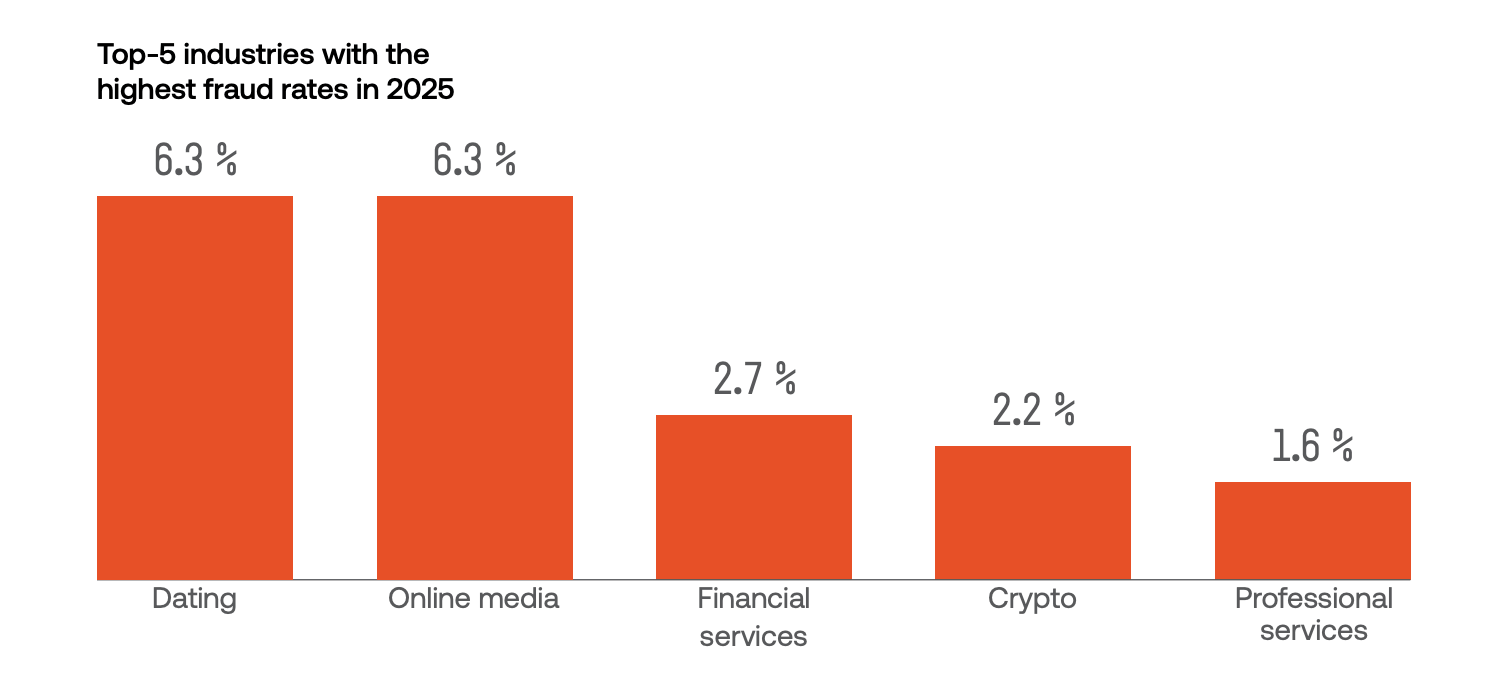

Trend 7: Unregulated industries still have a bull’s eye on their backs

Unregulated industries continue to be the most common targets of fraudsters, with online dating and media platforms being the most frequent choice for criminals seeking their prey.

Source: Sumsub’s 2025–2026 Identity Fraud Report.

In March 2025, over 6,000 people lost a combined £27 million (~US$36 million) in a boiler-room scam operating out of Tbilisi, Georgia. Victims were targeted through deepfake videos and sham promotions on social media, illustrating just how serious this type of manipulation can be.

Fraudsters likely favor unregulated industries for several reasons, chief among them that these industries generally have less stringent anti-fraud measures than regulated ones, since there is no regulator to enforce standards.

Trend 8: North America and Europe are winning against fraudsters, but it’s a different story elsewhere

Different parts of the world have seen very different shifts in their levels of fraud during 2025. The US and Canada experienced a 14.6% decline in fraud rates, while Europe also saw lower levels of fraud, with a 5.5% drop.

Other regions were not so lucky, though. Fraud rates across Africa grew 9.3% while in Latin America and the Caribbean, they rose 13.3%. But it was the Asia-Pacific (APAC) and Middle East regions that had the worst year for fraud growth, at 16.4% and 19.8% respectively.

The top 15 countries most protected against fraud

Source: Sumsub’s 2025–2026 Identity Fraud Report.

The top 15 countries least protected against fraud

Source: Sumsub’s 2025–2026 Identity Fraud Report.

Regulators strike back: What has already been done to fight AI-generated fraud?

Governments around the world are taking steps to protect against the threats posed by sophisticated fraud techniques, including those powered by AI. Some of the most prominent measures include:

- The EU Artificial Intelligence Act. This places obligations on providers and deployers of high-risk AI systems, such as certain biometric identification systems.

- The EU Digital Identity Wallet. This is intended to make identity verification more robust across the EU and will be available to every citizen, resident, and business in an EU member state by the end of 2026.

- The EU’s upcoming PSD3/PSR Payment Services framework. This aims to strengthen payment fraud prevention.

- The US’s new Digital Identity Guidelines (NIST SP 800-63-4). These are designed to strengthen identity checks through changes, including updated authentication and anti-spoofing standards.

- INTERPOL’s Project SynthWave. This project is working to train law enforcement officers across Southeast Asia to recognize and respond to deepfake evidence.

- “Failure to prevent fraud” is now a corporate criminal offense in the UK. Under the Economic Crime and Corporate Transparency Act 2023, large organizations, whether or not they are regulated, can be held criminally liable if an associated person commits fraud intended to benefit them and they did not have reasonable procedures in place to prevent it.

These initiatives showcase the expected direction of travel for governments, regulators, and law enforcement in the coming years. Businesses should keep a close eye on these developments to ensure they understand their own regulatory obligations as well as the tools and protocols that exist to help in the fight against fraud.

Combatting fraud in 2026: Why businesses need to fight fire with fire

The changing face of fraud means that businesses will need to adapt and adopt new tactics to protect themselves and their users, while also meeting their regulatory compliance obligations. In many cases, the very technology that is empowering new, more sophisticated forms of fraud can also help detect and prevent criminal activity.

Steps businesses must take to defend against sophisticated fraud in 2026 include:

💡Recruit your own AI agents for verification checks

As legitimate users increasingly employ AI agents as digital assistants, businesses must be able to verify those agents and the people behind them. KYC for AI agents will be essential to assess the level of risk they pose, and it will need to be rerun regularly to ensure agents have not begun to act suspiciously.

But this kind of continuous KYC for AI agents will be very labor-intensive, and human anti-fraud professionals won’t be able to keep up. Organizations will need their own AI agents to perform continuous monitoring and reverification of digital assistants and their operators in a way that is robust and cost-effective.
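The scheduling logic behind continuous KYC for agents can be simple even if the checks themselves are not. A minimal sketch, assuming hypothetical risk tiers and re-verification intervals (the values are placeholders, not recommendations), re-runs checks on a risk-based schedule and immediately whenever an agent’s behavior is flagged:

```python
from datetime import datetime, timedelta

# Hypothetical re-verification intervals per risk tier (illustrative values only)
REVERIFY_INTERVAL = {
    "low": timedelta(days=90),
    "medium": timedelta(days=30),
    "high": timedelta(days=7),
}

def needs_reverification(risk_tier: str, last_verified: datetime, now: datetime,
                         anomaly_detected: bool = False) -> bool:
    """Return True if an AI agent (and its operator) should be re-verified now."""
    if anomaly_detected:
        # Suspicious behavior overrides the regular schedule
        return True
    return now - last_verified >= REVERIFY_INTERVAL[risk_tier]

now = datetime(2026, 1, 15)
print(needs_reverification("high", datetime(2026, 1, 1), now))  # True (14 days >= 7)
print(needs_reverification("low", datetime(2026, 1, 1), now))   # False (14 days < 90)
print(needs_reverification("low", datetime(2026, 1, 1), now, anomaly_detected=True))  # True
```

An automated monitoring agent could evaluate a rule like this across every registered agent continuously, which is the part that does not scale with human reviewers alone.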

💡Implement AI-driven fraud detection

Machine learning is now key to cutting through the noise when dealing with high volumes of data about users and their behavior. AI can rapidly analyze vast amounts of information and implement flexible rulesets based on individual users’ levels of risk and other factors.

AI-powered fraud detection can swiftly identify suspicious activity and reduce false positives by ensuring overly strict rules are not applied to low-risk users. This allows faster, more accurate anti-fraud checks and helps businesses respond quickly to large-scale fraud attempts generated by AI tools.
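The idea of applying stricter rules only where the risk warrants it can be sketched as a mapping from a model’s risk score to verification steps. In this illustration, the score range, thresholds, and step names are all hypothetical:

```python
def choose_checks(risk_score: float) -> list:
    """Map a model-produced risk score in [0, 1] to verification steps.

    Thresholds and step names are hypothetical and purely illustrative.
    """
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    checks = ["document_check"]          # baseline check for every user
    if risk_score >= 0.3:
        checks.append("liveness_check")  # extra friction for medium risk
    if risk_score >= 0.7:
        checks.append("manual_review")   # escalate high-risk cases
    return checks

print(choose_checks(0.1))  # ['document_check']
print(choose_checks(0.5))  # ['document_check', 'liveness_check']
print(choose_checks(0.9))  # ['document_check', 'liveness_check', 'manual_review']
```

Low-risk users skip the extra friction, which is where the reduction in unnecessary checks comes from; in practice, the thresholds would be tuned against real outcomes rather than fixed by hand.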

💡Adopt advanced behavioral analytics to spot fraudsters

As fraudsters’ tools, such as synthetic identities and deepfakes, become harder to detect, one thing that can still reliably give them away is their behavior.

Behavioral analytics has long been used to detect fraud, but businesses must now shift to a multi-layered approach to stay ahead of criminals. This means analyzing user behavior over time, including during onboarding and when users make transactions.

The challenge is that a vast amount of information must be processed for effective multi-layer behavioral analytics at scale. This is again somewhere that businesses will need to rely on AI systems, as they are the only way to analyze this volume of data fast enough and accurately enough to keep up in the modern world.
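At its simplest, analyzing behavior over time means comparing what a user does now with what that same user has done before. The sketch below flags a value, such as a transaction amount, that sits far outside the user’s own history using a z-score. The threshold and the single-feature design are simplifying assumptions; a multi-layered system would combine many such signals across onboarding and transactions.

```python
from statistics import mean, stdev

def is_anomalous(history: list, current: float, z_threshold: float = 3.0) -> bool:
    """Flag a value that deviates strongly from this user's own history."""
    if len(history) < 2:
        return False  # not enough history to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current != mu  # any change from a perfectly constant history stands out
    return abs(current - mu) / sigma > z_threshold

history = [120, 95, 130, 110, 105]  # hypothetical past transaction amounts
print(is_anomalous(history, 2500))  # True
print(is_anomalous(history, 125))   # False
```

Because the baseline is per user, the same amount can be routine for one customer and a red flag for another, which is what distinguishes behavioral analytics from fixed global rules.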

Key takeaways about 2026 fraud trends

- Fraudsters are becoming increasingly sophisticated, using a range of hard-to-detect methods, often powered by AI.

- This is expected to become an even bigger problem for anti-fraud efforts in 2026 and beyond.

- Criminals are combining multiple techniques, including deepfakes, synthetic identities, and high-quality AI-generated documents, to beat different aspects of anti-fraud systems.

- Digital age verification may be vulnerable to manipulation by AI-powered techniques, including using deepfakes to pass biometric scans. This could act as a gateway to more serious fraud if people become used to using AI to bypass age verification.

- Fraudsters are now attacking how verification data is collected and transmitted, as well as the data itself, to overcome modern anti-fraud methods.

- Autonomous AI agents are beginning to be used to carry out fraud operations with minimal human intervention, and this is expected to accelerate in 2026, creating the potential for high-speed, multi-step attacks at scale.

- Unregulated industries, including dating and social media platforms, continue to be the top targets for fraudsters.

- North America and Europe saw falling fraud rates in 2025, while fraud rates increased in Africa, Latin America and the Caribbean, the Asia-Pacific region, and the Middle East.

- Traditional anti-fraud methods are unlikely to be able to keep up with the complexity and volume of sophisticated fraud tactics.

- Regulators are responding with new laws and other initiatives to boost protections against modern fraud techniques.

- Businesses will need to “fight fire with fire”, using sophisticated methods of their own, including tools such as AI agents and AI-powered behavioral analytics.

Stay ahead of fraud: Uncover the whole story on fraud trends and solutions in Sumsub’s Identity Fraud Report 2025-2026

What we’ve covered here really is just the tip of the iceberg. Check out Sumsub’s 2025–2026 Identity Fraud Report to get the full picture. In the Report, you’ll discover more fraud trends, a deeper forecast of what to look out for in the near future, and a winning fraud prevention strategy you can deploy to stay safe and meet regulatory expectations in 2026.


What is Sumsub anyway?

Not everyone loves compliance—but we do. Sumsub helps businesses verify users, prevent fraud, and meet regulatory requirements anywhere in the world, without compromises. From neobanks to mobility apps, we make sure honest users get in, and bad actors stay out.