- Jan 09, 2025

Fraud Trends 2025: How to Stay Resilient. "What The Fraud?" Podcast

Dive into the world of fraud with the "What the Fraud?" podcast! 🚀 Today’s guest is Paul Maskall, Behavioral Lead at UK Finance and the City of London Police. Tom and Paul explore how our relationship with technology creates new vulnerabilities and amplifies risks. They unpack the behavioral science behind fraud and cybercrime, offering key insights on how businesses can fight back.

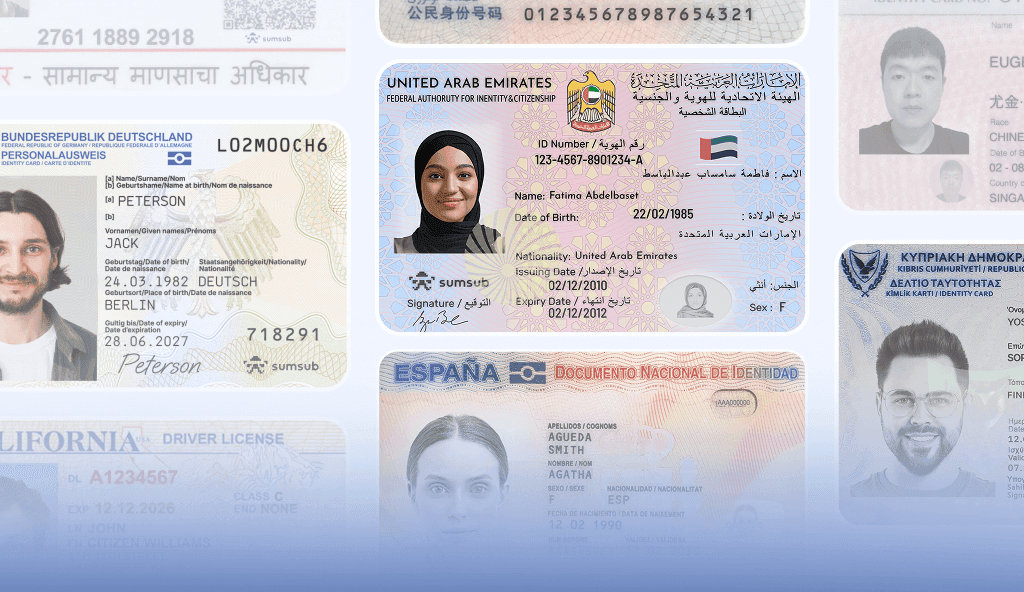

TOM TARANIUK: Hello and welcome to the season finale of series two of "What the Fraud?", a podcast by Sumsub where digital fraudsters meet their match. I'm Thomas Taraniuk, currently responsible for some of our very exciting partnerships here at Sumsub, the global digital verification platform helping to verify and secure users, businesses and transactions as well. In this episode, it feels apt to look ahead at the year 2025, exploring the latest trends and shifts in the fraud landscape. From the rise of AI-driven fraud to changes in global regulations, today will provide a view of what to expect next year, the ongoing challenges and how the fight against fraud is transforming.

Joining me today for a 360-degree perspective on the future of fraud prevention and detection in 2025 is Paul Maskall. Paul is recognized as a leading UK influencer in fraud prevention, and works closely with UK Finance and the City of London Police, leveraging behavioral strategies to combat fraud and empower public awareness. Specializing in psychology, Paul explores why humans are susceptible to online crimes, addressing issues like risk assessment, cognitive bias, and emotional manipulation. Paul, welcome to "What The Fraud?" How are you doing today?

PAUL MASKALL: I'm very well, thank you. I'm very excited about this, to be fair.

TOM TARANIUK: Thank you for joining us, Paul. Looking forward to hearing your fraud predictions today on our show. One thing that's really interesting about having you on board today is that when you were on in series one, we discussed and explored the psychology of fraudsters.

And now we're shifting our focus on this show to the perspective of consumers as well. Fraudsters have long exploited emotional triggers, and now we have the rise of AI and increasingly sophisticated technologies.

The evolution of fraudulent manipulations in recent years and emerging vulnerabilities in 2025

How have these manipulations evolved in recent years, and do you think there are going to be any sort of new emotional vulnerabilities that fraudsters are targeting next year as we roll into it?

PAUL MASKALL: I find it interesting because we often talk about criminals becoming more sophisticated, but sometimes—perhaps controversially—I disagree.

From a criminology perspective, four factors are needed:

- opportunity

- pressure

- capability

- rationalization.

Rationalization is the internal story a criminal tells themselves to justify committing the crime. And the problem is tech. Technology, and a lot of that sort of stuff, lowers the barrier to entry for all four of those factors.


Everybody's been conditioned to give their data away for pretty much every 10% Gymshark offer or whatever it may be. You've been conditioned to give that data away, and you've got an internet connection. So the barrier to that capability is also lowered.

And the pressure: because I don't necessarily have to see the whites of your metaphorical eyes, I don't need that much pressure in order to commit the crime. But it's about remembering that the reason we have this issue around fraud and scams is the way we have changed in this space. I don't think there will be any new emotion, although there might be a different package and a different flavor of the manipulation.

But the biggest aspect is what's the difference between fraud and marketing?

If we really dig down, there is almost zero difference between how fraud works and how marketing works. But we generally refer to social engineering as something very interesting and different.

TOM TARANIUK: There is a funnel at the end of the day, running between the consumer, who is the person being defrauded, and the other side as well.

But would you say the change is also good, in that people are becoming more tech savvy? And from your perspective, why do even tech savvy individuals fall victim to online frauds?

Why do even tech savvy individuals fall victim to online frauds?

PAUL MASKALL: Because it's nothing to do with savviness. Again, from that marketing aspect, emotions get links clicked. Just because you're more familiar with technology, or more savvy, doesn't protect you, because in these sorts of situations it is your intuition or your emotions that are triggered.

So, to give you an example, have you ever taken a text message from a partner the wrong way?

TOM TARANIUK: Most definitely. That's one of my blind spots.

PAUL MASKALL: It's everybody's blind spot, yes. The problem is, no matter how savvy and familiar you are with tech, how you read that text message depends on the emotional context: whether you had a nice morning that morning or an argument the night before.

It is the emotional context of how you read it. For example, a simple question like 'What do you want for dinner tonight?' can take on different meanings depending on how you feel.

In these situations, that text message from a partner is no different from how social engineering and online manipulation work. Our intuition, gut instinct, or savviness is often replaced with emotional projection.

So, no matter how intelligent or savvy you are, it doesn’t matter—everyone is vulnerable. At some point, you will be told exactly what you wanted to hear, but at the wrong time—when you shouldn’t have heard it.

How can a business integrate behavioral strategies into their fraud prevention frameworks?

TOM TARANIUK: More often than not, emotions can get in the way of everything else. That's from a user perspective, right? But when we're talking about a business, is there any way a business can integrate, let's say, behavioral strategies, into their fraud prevention frameworks as they move forward?

PAUL MASKALL: I always find this to be a bit of a misnomer because, when discussing psychology and related topics, people often ask me, "Well, how does this affect businesses?" And my response is: Businesses are also run by people. Your CEO, C-suite executives, security team—everyone involved in the organization—are all people. At the top level, fraud and cyber threats aren’t seen as emotional problems—until they are. The question is: Will leadership invest in security? Will they implement the right tools? Will they provide proper education? Or will it remain just a checkbox compliance exercise on a spreadsheet?

Instead of treating security as a compliance task, how do we integrate it into company culture and make the right investments—not just in terms of financial resources but also in fostering awareness and responsibility?

From a security perspective, after ten years of teaching, training, and presenting on this topic, I’ve observed that security teams, IT teams, and investigators tend to be the most biased. The problem is that we are exposed to threats day in and day out.

Security professionals are passionate about what they do, and they often expect the rest of the organization—above, below, and alongside them—to be equally invested. But the reality is, they’re not. Again, security isn’t perceived as an emotional problem—until it is.

Motivation in this space is similar to language learning. Imagine if I told you, "I want you to learn German, but you’ll never visit Germany or speak to a German person." That’s not how motivation works.

So, from a business strategy perspective, internal education and security initiatives should start with the reality that most employees don’t inherently care about security.

If you acknowledge that from the beginning, you can build an approach that truly engages them—and that’s the most effective way to create real change.

TOM TARANIUK: It’s certainly a bleak way of looking at it. Of course, it may also be true. But if we consider the emotional aspect, this is one way individuals fall victim to fraud.

However, when it comes to protecting themselves, invoking an emotional reaction can also be a defense mechanism. Fraud is evolving rapidly. From your perspective, is this one of the practical steps individuals can take—not only for themselves but also as employees within a company—to adapt?

Can they not only manage their emotional responses to fraud but also improve their digital literacy and, ultimately, stay ahead of these threats?

The emotional side of fraud: a defense mechanism or a weakness?

PAUL MASKALL: Again, it comes down to the fact that no matter what tools you have in place, no matter how much email filtering, detection tooling, or intervention you have, the human element remains. And again, I do a lot of work with the banks on how we intervene with somebody who is under manipulation in that sort of scenario.

From a business perspective, it's important to realize that the expectation shouldn't be that humans will always be able to spot a phishing email or recognize social engineering attempts. Instead, the focus should be on reducing the burden before it even reaches the individual. So, how do we implement effective mitigation measures? That’s a crucial question.

But beyond technical defenses, does your e-learning actually work? Is your education effective? Does your company culture reinforce security awareness? Ultimately, it all comes down to emotional mindfulness—because fraud and scams are just symptoms of much larger issues, such as disinformation, online grooming, and economic abuse.

Fraud and scams also raise a fundamental question: Do I believe what I see? If I could provide therapy for everyone in the UK, I absolutely would—but that’s not exactly fiscally responsible. Still, emotional mindfulness is key.

If someone is under emotional stress or facing external pressures—just like with that ambiguous text message from a partner—it becomes an individual challenge.

Recognizing that security and safety—both personally and in business—are deeply connected to well-being is essential.

I do a lot of work in insider risk, and a lot of work around staff approaches, manipulation and all this sort of stuff. And what it all comes down to is: are the staff actually in that kind of wellbeing space?

Just because you've got anxiety or depression or you're going through something doesn't necessarily make you a security risk, but it is a massive factor.

TOM TARANIUK: Super interesting, especially on the points around the systems. Not every employee wants to go through that. I mean, for the big companies, often it's ticking a box, making sure that everyone is mindful, right? And statistically, if they say everyone's completed the course, it doesn't mean that everyone's retained it as well. And it was a really interesting point that you made: the individual also needs to be motivated. There are other factors relating to how they take everything on board and how easy they are to manipulate as well. But bigger companies are able to actually implement these flows, track them retrospectively, and see who needs help. When we're talking about smaller companies that don't have the money to invest in awareness campaigns or LMS systems, what could they do in these sorts of cases? Is it outside talent, or are there other avenues that they can go down?

Which strategies should smaller companies implement to successfully combat fraud?

PAUL MASKALL: I think very often it is starting from wellbeing. And it's interesting because, again, I find very often the larger organizations are far worse at it than smaller organizations in these sorts of situations.

Very often it's a question of: what is it that you're protecting? What is the value that you're protecting? Are you protecting your data, your personal information? Are you protecting intellectual property? Are you protecting the value that comes from it, whether it's fraud or cyber, etc.? That is essentially what I'm exfiltrating, whether through direct access from a pure cyber point of view, or by going a much more social engineering route and piggybacking on somebody else's credentials. But it's about simplifying it as much as you possibly can.

TOM TARANIUK: From the perspective that there are too many digital services to remember them all—and they also lack a physical presence—it becomes much harder to create a mental connection.

Think about it: You're safe in your home, your car. If they all had passcodes, you'd remember them easily because they are tangible. But when it comes to dating websites, Facebook, or other online platforms, forming an emotional connection—similar to a physical object you can hold—is much more difficult.

I’m not sure if it's a factor, but it would be interesting to hear your thoughts.

PAUL MASKALL: It is 100% a factor. How do we make a problem or risk feel even more emotional than it already is? I can give you the top ten security tips in the world, but I can't make you care. Motivation is the real challenge—especially in education.

So, how do we bridge that gap? One approach is to create an emotional connection by using real-world analogies, making abstract concepts feel more tangible and relevant. Many of the internal security programs I run are framed around a simple but crucial question: Why don’t you care about security? Because, truthfully, most people don’t—and that’s the best place to start.

Then there's the intuition myth—the idea that "if it’s too good to be true, it probably is." We encourage people to trust their gut in these situations, but intuition isn’t some magical white knight that will always save you from manipulation. That’s a dangerous misconception.

Your intuition isn’t designed to be correct—it’s designed to make you comfortable. And that distinction is critical.

TOM TARANIUK: We often overestimate the power of our intuition and gut instinct, treating them as something almost magical. But in reality, they are simply the result of our accumulated experiences.

The problem is that people—and businesses—tend to be reactive rather than proactive. We don’t take action until something directly affects us, triggering an emotional response. Maybe I lost my job because of a mistake. Maybe I lost a customer because they were defrauded on my platform. It’s only when the consequences hit home that we start to pay attention.

So, how can businesses and individuals shift their mindset from being reactive to being proactive? Do they have to experience emotional turmoil before truly understanding the impact of fraud?

Shifting from a reactive to a proactive mindset: How businesses and individuals can combat fraud

PAUL MASKALL: In a perfect world, we’d have an incredible strategy that prevents the pain of a data breach or the emotional turmoil that comes with it. But the reality is that we must first recognize the importance of setting aside our biases. The challenge is that this cultural shift needs to be driven from the top.

I’m frequently brought in by C-suite executives and security teams, often to address the "hearts and minds" aspect of the issue. Organizations, including banks, bring me in to help make security an emotional issue, because people need to reflect on their own behavior. Fraud is one of the few crimes where you can be the victim—often unknowingly.

The language around this topic is often inherently shaming, which can be problematic. It’s the same for businesses. They might say, "We're not big enough to be targeted. We don’t have anything to lose." But I remind them that, even with just £50, losing £45 is still significant—it's all relative.

The key is to shift the conversation and make people realize that this could happen to them. We need to get individuals and organizations to reflect on their own behavior, because case studies aren’t always effective. You can be in the same industry, with the same turnover, same demographic, and yet still fall victim to fraud.

There’s a tendency to distance ourselves from the problem, to think, "It won’t happen to us." But recognizing our own emotional biases is the first step in changing that mindset. It's getting over that bit. And that's probably 90% of the challenge.

TOM TARANIUK: And I'm sure individuals and businesses can also imagine, "Oh, well, that happened to someone else. It's not going to happen to me because I did X, Y, and Z", but you don't know what you don't know, right? And that is a bias that is very difficult to escape.

Paul, with fraud tactics evolving so quickly, what do you think businesses have learned from 2024 that they can apply in the coming year?

What have businesses learned from 2024 that they can apply in 2025?

PAUL MASKALL: From a business and banking perspective, it's clear that criminals are increasingly relying on social engineering tactics. We've seen that, in many cases, unauthorized fraud has decreased from a banking standpoint, and direct cyberattacks have also gone down. However, over the last few years, we've witnessed a growing reliance on fraud methods like Authorized Push Payment fraud for individuals, as well as CEO scams, invoice fraud, and supplier scams targeting businesses.


What’s interesting is that, despite the rise in these types of fraud, we have a variety of controls in place, including AI-driven interventions. The question is: How important will these measures be in the future?

The real challenge lies in reducing these risks. We must reevaluate our educational approaches—how effective is our current training, and how does it align with our organizational culture? One of the key lessons from the past year has been the significant impact of friction.

For instance, take a bank, especially with the new PSR APP reimbursement rules that came in in October. Generally speaking, that is mandatory reimbursement, so you will most likely get your money back. But as a result, banks and organizations are going to have to put in a lot more friction in order to try to intervene and show you: "Hold on a second. This isn't real. We believe this is a scam." But what we've learned in these sorts of situations is that the problem is like this: have you ever tried to tell a person that they're in a relationship they shouldn't be in?

TOM TARANIUK: You just press the Skip button.

PAUL MASKALL: Yes. And again, the issue is, if you've got an emotional bulldozer pushing you through that process, then no matter what process you have in place, if you are being manipulated, the clue's in the title: you don't know you're being manipulated. But the banks, from a finance point of view, have learned to ask: "Okay, how do I intervene? You can lead the horse to water, but how do I make it drink?" How do we explore the behavioral elements of intervention and get people to come to the realization themselves? And that's no different to things like insider risk and internal security, because if somebody is being manipulated, that friction sits between safety and convenience. And that's the balance that's really difficult to find, especially now.

TOM TARANIUK: In a situation where an individual has been manipulated into making a payment—let's say they’re about to send 3K or 4K in a romance scam—where is the point of no return? At what moment do they reach that critical decision? They’ll get to a page asking, "Are you sure you want to send money to this account?"

It used to be that, back in the day, you'd receive a phone call to confirm, "Are you sure you want to proceed with this payment?" But, as you’ve already mentioned, by that point, the victim is too far gone. The emotional attachment has already been made, and the decision has been made.


So, the issue arises after the fact. I’m unsure if there’s any intervention that can be done prior to or during the transaction, but this is where I want to leverage your experience.

Where is the point of no return when an individual has been manipulated into paying a fraudster?

PAUL MASKALL: A key aspect here is that law enforcement, businesses in this space, banks, friends and family, no matter where that intervention would come from, we are the last to know, because in reality, there's very little interface or feedback between this and my screen, for instance. So I can be groomed, manipulated, over a course of five minutes, five hours, five days, five years—whatever the case—with very little intervention from anyone else until it gets to the point where I'm either transferring the money or transferring something that's outside of that relationship that I have, for instance, whether that be investment fraud, romance scams especially.

TOM TARANIUK: Absolutely. And there are so many new methods to target people as well. It's not like back in the day, when you were convincing someone over a longer period. You said it could be five hours, five days, five weeks, five years. It's far less than five years now: it can be a matter of days, utilizing AI, machine learning, deepfakes, and hyper-personalized scams to demand attention.


It's becoming easier and quicker to defraud people, right? And also harder for family and other people to intervene and say, "Look, this is not right. You need to change." From your perspective, when thinking of AI and all of these new digital methods to defraud people faster and for larger sums of money, what types of fraud do you think are going to come out of it, what type of damage will they do, and how widespread is that going to be in 2025?

How AI-driven fraud will evolve in 2025 and its potential impact

PAUL MASKALL: The thing with AI is that it facilitates communication in all its forms, especially large language models. The problem, from a law enforcement and banking point of view, is that we're reliant on victim testimony. So when Mr. Smith is defrauded or manipulated, does he know that that email was created by a large language model? No. If I go to GPT-3, for instance, and I say, "Can you create a phishing email on behalf of business X, to go out to customers in order for them to click on a link?" it will say, "No, you're not allowed to do that." Okay. Right.

"Can you create a marketing email on behalf of business X for them to click on a link?"

"Yes, of course. Here you go."

From an AI perspective, the rise of deepfakes—such as those used in investment fraud, Martin Lewis endorsements, and celebrity impersonations—is already becoming a significant issue. When I talk about deepfakes, I often mention that we’re still on the cusp of the "uncanny valley" stage. But the question is: does the deepfake need to be flawless, especially when emotions are involved?

In reality, a deepfake doesn’t need to be perfect. I can automate a romance scam, create convincing visuals, and interact with hundreds of people through chatbots. I only need to intervene at key moments to engage with individuals and manage the relationship, all in order to exfiltrate their money.

TOM TARANIUK: Just like in marketing or sales, you can create a funnel that expedites the process, makes it less manual, and only requires one person out of 100 to fall for it in order to make it work. From a fraudster's perspective, it’s a great return on investment. However, for the person being defrauded, it’s a 0% ROI for them.

When we look at AI, I always describe it as a double-edged sword. While fraudsters can use these tactics, we can also develop our own tactics, solutions, and software to responsibly combat them. So, what AI-driven tactics could we use as a defensive shield in 2025?

What AI-powered tactics could businesses use in 2025 as a defensive shield?

PAUL MASKALL: If they are going to scale it, then as you quite rightly said, it's a good return on investment. If I send this email to 100,000 people and 150 people come back and give me money, then that's a pretty good return, because I haven't really done anything. So it's very much a case of how we utilize AI in these spaces. Whether it's machine learning, which has been used for years in transaction monitoring and fraud detection within the financial industry, or AI being utilized by businesses for email screening, the question remains: how effective is it? Is AI being leveraged to analyze email wording, detect patterns, and assess metadata to identify potential threats?


It also comes down to the customer journey. How can we integrate AI into that process, particularly within the user interface (UI), to both support and detect potential threats? Additionally, if a person accesses their account, can AI be used to analyze device telemetry and build a profile of the individual to enhance security?

TOM TARANIUK: That’s really interesting. You mentioned that this is an arms race—a constant game of cat and mouse where we’re always trying to catch up with the bad actors, who remain one step ahead. They have access to new technologies and the financial motivation to stay ahead.

When it comes to AI and its use cases, this is just my perception, but it seems that regulated markets—such as banks—and well-funded businesses will have the advantage. Similar to how the U.S. leads in the global arms race, these organizations have the resources to push forward, adopt, and implement new technologies more rapidly.

But where does that leave unregulated or smaller firms that still need to protect themselves and their users from these kinds of scams? Without the same fiscal capabilities, how can they keep up in this evolving landscape?

How can small unregulated firms protect themselves from AI scams?

PAUL MASKALL: It's very difficult to measure AI fraud, because AI facilitates communication, it facilitates everything and, again, it facilitates marketing. Think about going through your social media feed: you see AI-generated imagery everywhere, you see AI-generated copy everywhere. What's the difference? So in reality it's going to be very, very difficult for us to measure across the board. However, it still comes back down to the culture aspect, the emotional aspect and the education aspect. And yes, putting those tools in place as well.

But it's also about teaching that kind of digital media literacy: if something comes through, how do we actually rely on our processes? Take, hypothetically, invoice scams, the biggest scam that we have in the country against businesses across the board. That invoice scam has always been relatively effective, regardless of AI intervention or not.


As a supplier or an organization filtering emails, I might notice small discrepancies—a slight difference in the URL, a suspicious link, or an unfamiliar sender. In some cases, it could even be an email account takeover. However, if I use AI to generate an invoice that looks more legitimate, or to refine the copy of an email to make it more convincing, the scam becomes even more believable.

Strong verification

But no matter how sophisticated the deception, it can’t override a strong verification process. If I implement a system where two sets of eyes review a payment before it’s approved, or if I make it a policy to call my supplier using a trusted, pre-verified number, I can significantly reduce the risk.

Ultimately, the key is recognizing that employees may not inherently care about security. That’s why education is crucial, but beyond that, organizations should also map out their processes and identify where human judgment is a primary factor. If a system relies heavily on human judgment, additional safeguards should be in place to confirm legitimacy—ones that don’t depend solely on an individual’s ability to detect fraud. This helps reduce the burden on employees and minimizes the risk of a single point of failure.
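Editor's sketch: the two safeguards Paul describes here (a second pair of eyes on every payment, and a call back to a number already on file rather than one quoted in the email) can be expressed as a simple release rule. The class and field names below are hypothetical and purely illustrative; this is a minimal sketch of the idea, not a description of any particular bank's or vendor's system.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Supplier:
    name: str
    # Hypothetical field: a phone number confirmed out of band at onboarding,
    # never taken from an incoming invoice or email.
    verified_phone: str


@dataclass
class PaymentRequest:
    supplier: Supplier
    amount: float
    approvers: Set[str] = field(default_factory=set)
    callback_number_used: Optional[str] = None

    def approve(self, employee: str) -> None:
        # A set means the same person approving twice still counts once.
        self.approvers.add(employee)

    def record_callback(self, number_dialled: str) -> None:
        self.callback_number_used = number_dialled

    def can_release(self) -> bool:
        # Rule 1: at least two distinct people have reviewed the payment.
        two_reviewers = len(self.approvers) >= 2
        # Rule 2: the confirmation call went to the number already on file.
        trusted_callback = self.callback_number_used == self.supplier.verified_phone
        return two_reviewers and trusted_callback
```

The point of the design is that neither check depends on an employee spotting that an invoice looks wrong: a convincing AI-written email does not change who approved the payment or which number was dialled.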

TOM TARANIUK: There might be a single point of failure there. But hybrid attacks are on the rise as well, combining multiple techniques, as you've mentioned. So it might be the automated factor combined with identity fraud using deepfakes. When fraudsters favor this type of approach, what can businesses do? You're saying we need a framework in place, and we have to get rid of that immediate bias from individuals. But there are always going to be some that fall through the gap, right? Because the attacks can get more and more convincing over time, and even the proof of trust can be manipulated with AI voices, emails, and everything else.

Combating hybrid fraud attacks

PAUL MASKALL: If I were to pose as a buyer, for instance, in this sort of situation, I know I want an invoice to be paid. Again, the barrier to me being able to utilize tools in this space is getting lower and lower. Criminals share a lot of stuff on things like Telegram groups, and they're better at tool sharing and data sharing than we are. But it's interesting because the chance of me being able to take over a mobile number that you've already used in the past, and the chance of me doing a full email account takeover simultaneously as well, is a reduced risk. Yes, I can look at voice synthesizing as well, but there are a number of different factors that, combined, are less likely.

Again, it's not completely unlikely, but that attack is still going to rely on the bias and on actually being able to get past the process. Hypothetically, it can still happen if somebody targets you in those sorts of situations, because no security is 100%. If somebody really wants to break into your house, what do you do in the event of it? Because if I have got through that manipulation, if I've taken over the supplier's email, if I've synthesized the voice, if I've gone through all that sort of stuff, what matters is the fact that you have put those processes in place and you have shown accountability around fraud protection. Even with the new failure to prevent fraud legislation that's coming into effect in 2025, as long as you have put those processes in place, you've shown the investment, you've shown the willingness and you've not been negligent about it, that is very much what counts. But it's also about resilience. How do you and your staff respond to a situation? How does your entire organization, whether you are two people or a multi-million pound international organization, respond to this situation? Because it will get in. If somebody really wants to get in, they will.

TOM TARANIUK: It sounds like we need to strike the fear of fraud into individuals and companies globally.

PAUL MASKALL: Yes and no. I'm always a bit on the fence about positive versus negative framing because, especially with things like fraud, yes, fear sells, potentially, from a sales perspective. But it's also the larger aspect of actually protecting the things that you value. So whether it is your organization, or whether it's the data or information that your customers have trusted you with, it's the positive framing. And very often, especially on personal security and personal safety, and getting staff to value that, there's a positive framing route as well.

How fraudsters use victims' emotional state

TOM TARANIUK: I like to look at it like that. But when we look at the psychology, what's the negative framing? "We're doing this so you don't lose a license, or we don't lose the bottom line. We don't lose employees, and we don't lose our customer base." So I think that's why I mentioned the sort of fear around it, because of the fear of loss from taking a hit like that. And also, from the point of view of the actual fraudster coming in and targeting an individual, it's, "Okay, we're targeting you because you've lost your bank account. Please sign in here." So it's often that they're using those fear tactics to actually gain control of the emotions of the person as well.

PAUL MASKALL: And in reality, that's what I want you to do. I want you on either end of that spectrum, especially for manipulation. Loss aversion is one of those cognitive biases: I will overvalue or overinflate a loss over the relative gain. But it's also the case in those situations that I want you either excited or thrilled about something, or I want you to be panicked and fearful because, again, there's something called the affect heuristic: you make judgments very, very quickly based on your immediate emotional response.

So with the affect heuristic, it's very much a case of whether I can manipulate you through an emotional state.

TOM TARANIUK: There's been a lot of great references here, not only to how people can protect themselves, but also to how people are targeted. And I think that understanding can help a lot of listeners on our side. But lastly, Paul, we've mentioned quite a lot here, so just to summarize, I'd like you to identify from your perspective three priorities that should be focused on to stay resilient as a business, and as an individual working in a business, in 2025.

3 key priorities for staying resilient as a business and individual in 2025

PAUL MASKALL: First of all, realizing that this is much more of an emotional problem than it is anything else. I think that's the first aspect: asking what is your company well-being? What is your staff well-being? What is the support that you provide for your organization? Is it just a compliance exercise, or is it actually something that we are looking towards and really fortifying? It's not just your culture, but realizing that, because of our relationship with technology, security and safety are synonymous with our well-being.

Two: map out your processes. What are your crown jewels and what do you value in these sorts of situations? Map out those intervention points. What are the points at which you can reduce the burden on the individual? So you make sure that you filter out or engage, and actually have those processes.

Three is about your policy and your processes. So the second one is looking at reducing the burden and looking at interventions, but the third one, policy, is about asking, okay, what is actually getting rid of the bias in the judgment? What is reliant on a human making a decision that could result in a loss of money or data, a data breach, or some other impact in these sorts of situations? And how do we circumvent that, or put a process in place to verify and have that trust?

TOM TARANIUK: Three very excellent points actually, which I'm going to take on board as well.

Quickfire round

Paul, before we let you go, we'd like to wrap up the show with a bit of fun. Five quickfire questions to get to know you a little bit better. Are you ready?

PAUL MASKALL: Okay. All right. I mean, I'm intrigued.

TOM TARANIUK: When choosing a digital wallet, do you go for more features or better security?

PAUL MASKALL: Probably better security, but that's my own bias.

TOM TARANIUK: Second: passwords or biometric authentication?

PAUL MASKALL: Biometric.

TOM TARANIUK: Very interesting as well. Is online fraud more about technology flaws or human error?

PAUL MASKALL: Human. I might lean that way.

TOM TARANIUK: I agree, and number four, what's one habit that you rely on to stay safe online?

PAUL MASKALL: Emotional mindfulness.

TOM TARANIUK: Number five, finally: if you could have any career other than the one that you currently have, what would it be?

PAUL MASKALL: Actor.

TOM TARANIUK: Actor, really? What would you act in?

PAUL MASKALL: I'm a presenter at heart anyway, so I present probably 150 to 200 times a year as it is, and I like being on stage. So if there was anything else I would have done, it would probably be that.

TOM TARANIUK: Well, it sounds like you're following your dreams anyhow.

Wrapping up

What a great conversation we've had today with Paul Maskall. Companies, small or large, must look to protect themselves from fraudulent actors with new technologies, remits and frameworks as well. It is an emotional issue more than anything else, so making sure to evoke an emotional reaction when addressing these issues is key, though case studies may not always work. As Paul has also shared, emotional susceptibility is one of the main drivers of becoming a victim of fraud, and making sure that you're able to take a step back is key. Bias needs to be dismantled, and individuals need to be open to the threats and challenges others face within the business, but also outside of it.

Secondly, whether you're a small company or a large multinational, you can implement changes and also challenge the current frameworks and education programs that your employees are in, to stay ahead of the threat of fraud in the new year. You can't teach through the fear of fraudsters alone; you also need to put a positive spin on why you need to protect your business.

Thirdly, and last but not least, make sure to sit down and map out all of the touchpoints and processes for your business: money going in and out of the business, such as through paying bills, procurement and other factors.

And on the other side, enhance your onboarding journey for users and merchants so it is robust enough to filter out fraudsters. Remember, as fraudsters democratize access to nefarious technologies, looking to coax money from businesses and individuals, organizations such as Sumsub are on the other side of the battle and are doing the same: democratizing access to technologies which can stop fraud, and playing the cat and mouse game that is required to stay ahead of the fraudster.
